Aaron Vidal

23 | Interdisciplinary Creative

In scarcity we value tools, in abundance we value taste

📍 South London, United Kingdom

💼 Hardware Engineer @ Airbus Space

🎓 BEng Electronic Engineering (First Class Honors)

✉️ aaron@vidalion.co

💼 Experience

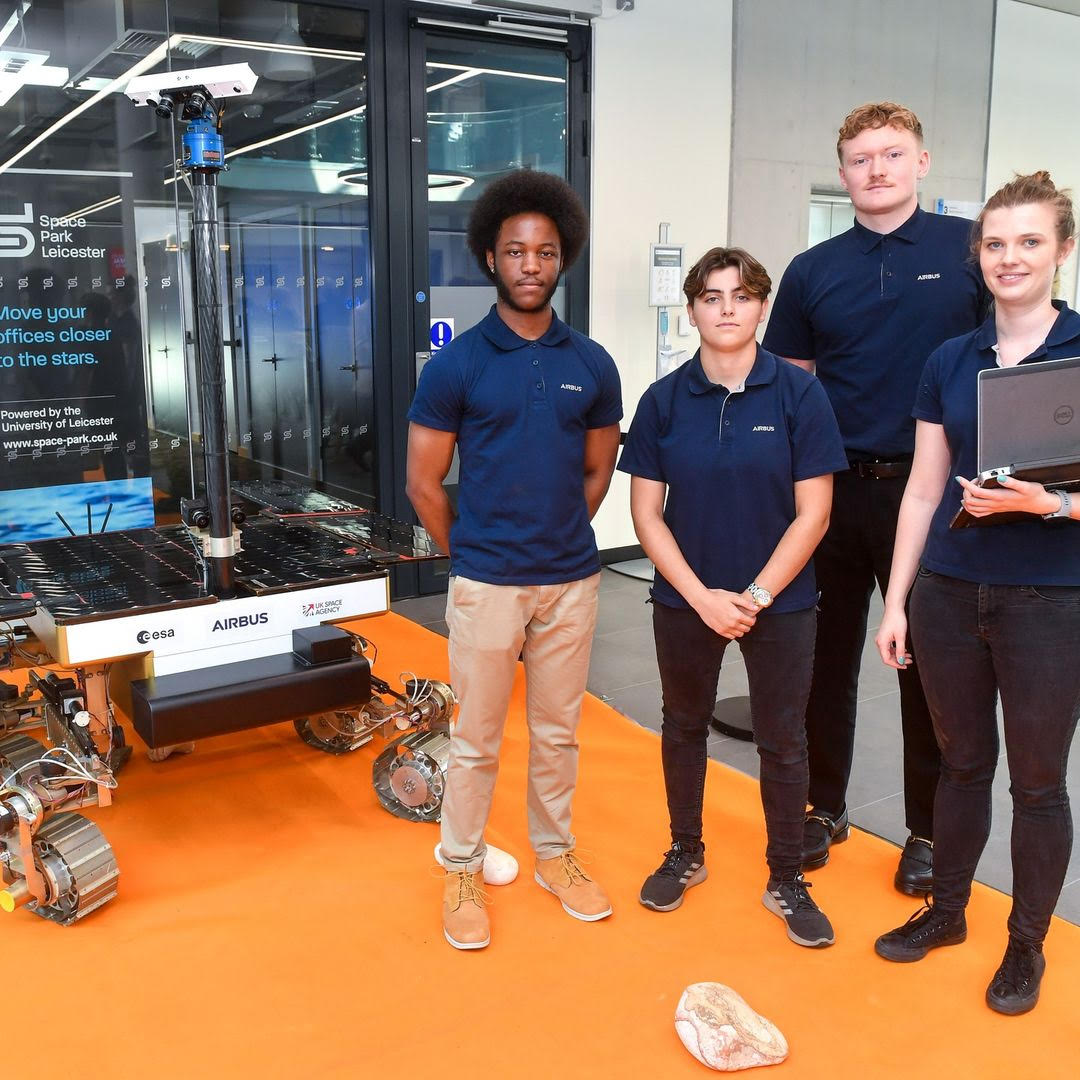

Hardware Engineer @ Airbus Space

2021 - Present

- Secure Communication Systems: Design, development, and testing of satellite modems for secure communication

- Embedded Systems: Built cost-effective hardware simulators, achieving 98% savings

- Collaboration: Projects with European Space Agency and TÜV engineers for testing and validation

- Innovation: Led 3D-printed diorama designs for Proteus Systems and proposed NLP based solutions

- Outreach: Promoting STEM education through partnerships with Space Park Leicester and others

STEM Outreach & Science Communication

2021 - Present

Growing up, I benefited from many STEM outreach programmes, so I now prioritise paying it forward. As part of Airbus' STEM outreach team, I attend events to inspire students from underrepresented backgrounds, partnering with organisations like Motivez, NHS Careers, Solent University, and local schools.

Highlights include teaching drone design at Mayfield School and linking astrophysics to Airbus at Priory School. Seeing the same spark of curiosity in others (that I still feel) is always energising and rewarding.

Freelance Computer Aided and Graphic Design

2019 - Present

Communication with global clients across language barriers.

Adobe Photoshop, Adobe Illustrator, Fusion 360 and Blender.

🧠 Projects

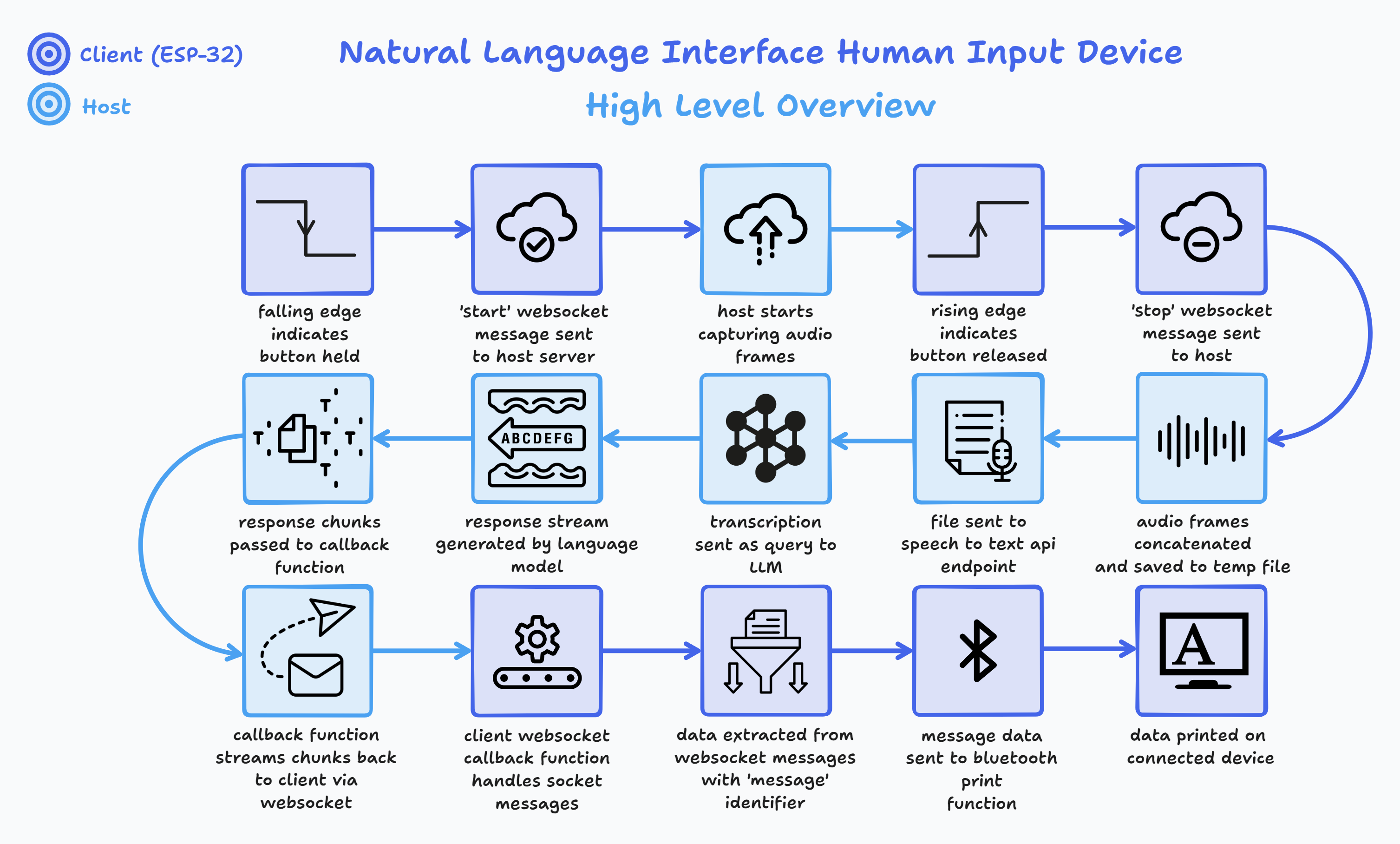

💬 Natural Language Human Input Device 2025 (Ongoing)

Towards Platform Agnostic Device Control

Proposed as a candidate solution in my dissertation, this device aims to serve as a universal remote for any Bluetooth-enabled device by mimicking human input via natural language, utilising the BleKeyboard.h library.

"What I cannot create, I do not understand." - Richard Feynman

This project is a continuation of pageR and my exploration into handheld embodied intelligence hardware.

It also serves as a development platform for research into applying IMUs, MEMS, SPI and I2S to improve HCI.

At the dawn of the "AI Agent", it is particularly important to consider how we design new systems and workflows to accommodate them. I envision a plurality of devices akin to orchestral batons, used to conduct agent activities using natural language (or more novel modalities).

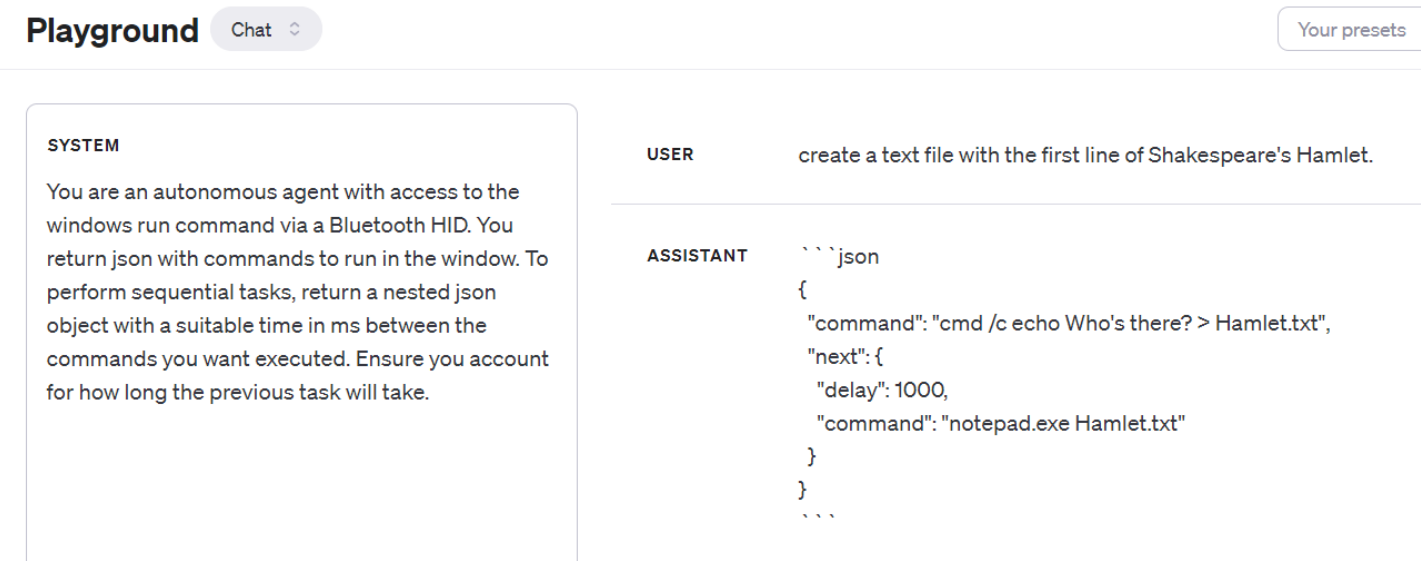

Process Flow Diagram

Example of Language Model Logic, JSON parsed in 'data extraction' step

Objectives

- implementing "push to talk" LLM inference, continuing on from pageR

- speech > stt > intent > bluetooth input

- inertial measurement unit as mouse input

- deep learning to characterise imu motion tx over websocket

- flashing firmware (on demand) ota with esptool.py via raspberry pi

- "reimagining the pager" using the beeper protocol to redirect priority messages to the device - a truly personal assistant

- [aspirational] implementing functionality using quantised models on FPGA in VHDL/Verilog for low power offline inference
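The "speech > stt > intent > bluetooth input" objective, together with the JSON 'data extraction' step, could be sketched roughly as below. This is a minimal illustration rather than the project's actual code: the intent schema (`action` and `value`), the action names, and both function names are assumptions made for the example, and sending the keys over Bluetooth is left out.

```python
import json

# Hypothetical intent schema: {"action": "type_text" | "press_key", "value": "..."}
ALLOWED_ACTIONS = {"type_text", "press_key"}


def extract_intent(model_reply: str) -> dict:
    """Parse the text between the first '{' and the last '}' as JSON and validate it."""
    start = model_reply.find("{")
    end = model_reply.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in model reply")
    intent = json.loads(model_reply[start:end + 1])
    if intent.get("action") not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {intent.get('action')!r}")
    if not isinstance(intent.get("value"), str):
        raise ValueError("intent 'value' must be a string")
    return intent


def intent_to_keystrokes(intent: dict) -> list:
    """Translate a validated intent into a flat list of key names to send."""
    if intent["action"] == "type_text":
        return list(intent["value"])  # one entry per character
    return [intent["value"]]  # a single named key, e.g. "ENTER"


# The model may wrap the JSON in conversational text, so it is extracted first.
reply = 'Sure. {"action": "type_text", "value": "hi"}'
keys = intent_to_keystrokes(extract_intent(reply))
```

Validating the action against an allow-list before anything reaches the input device keeps a malformed or unexpected model reply from turning into arbitrary keystrokes.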

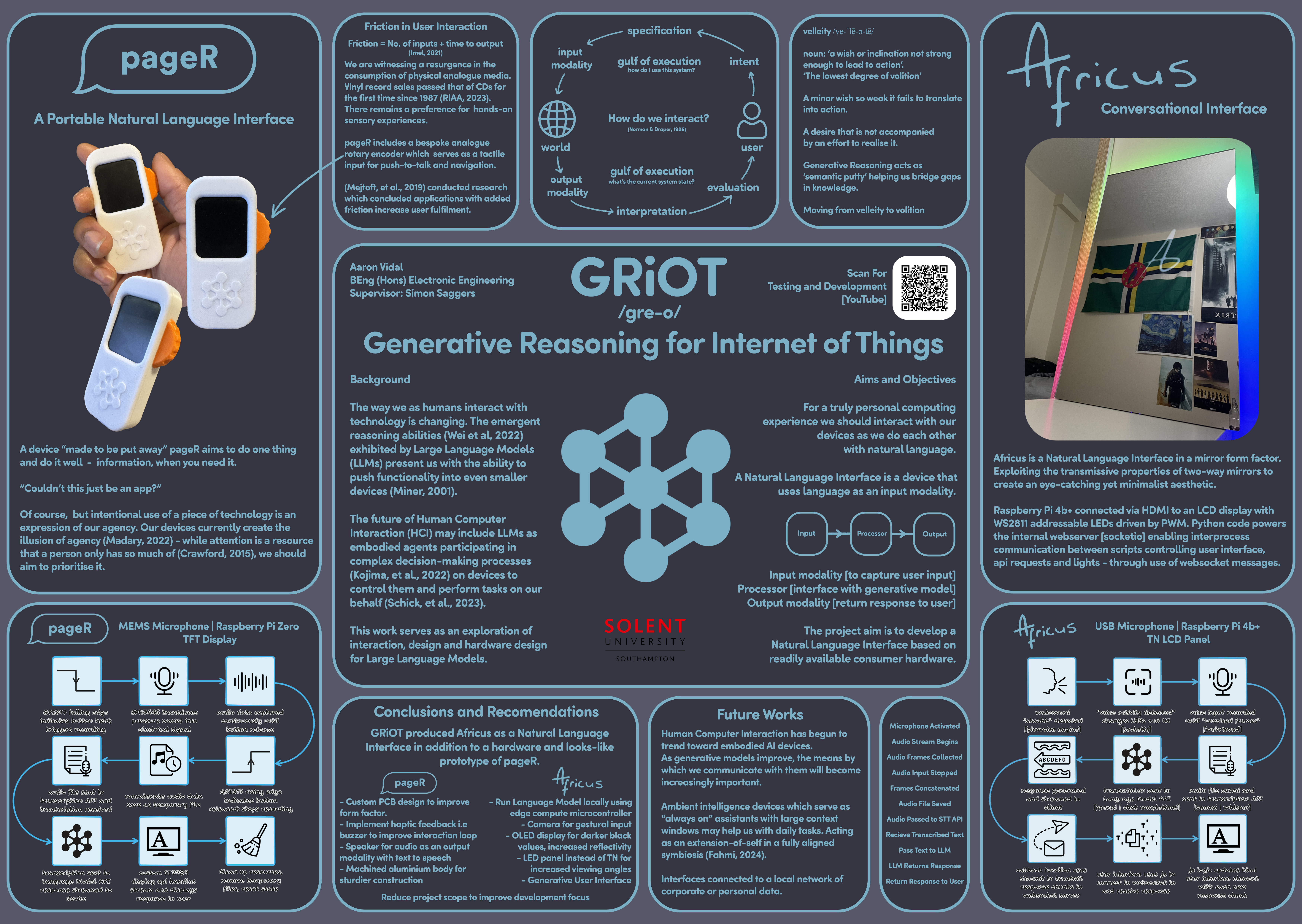

GRiOT

2024

Generative Reasoning for Internet of Things

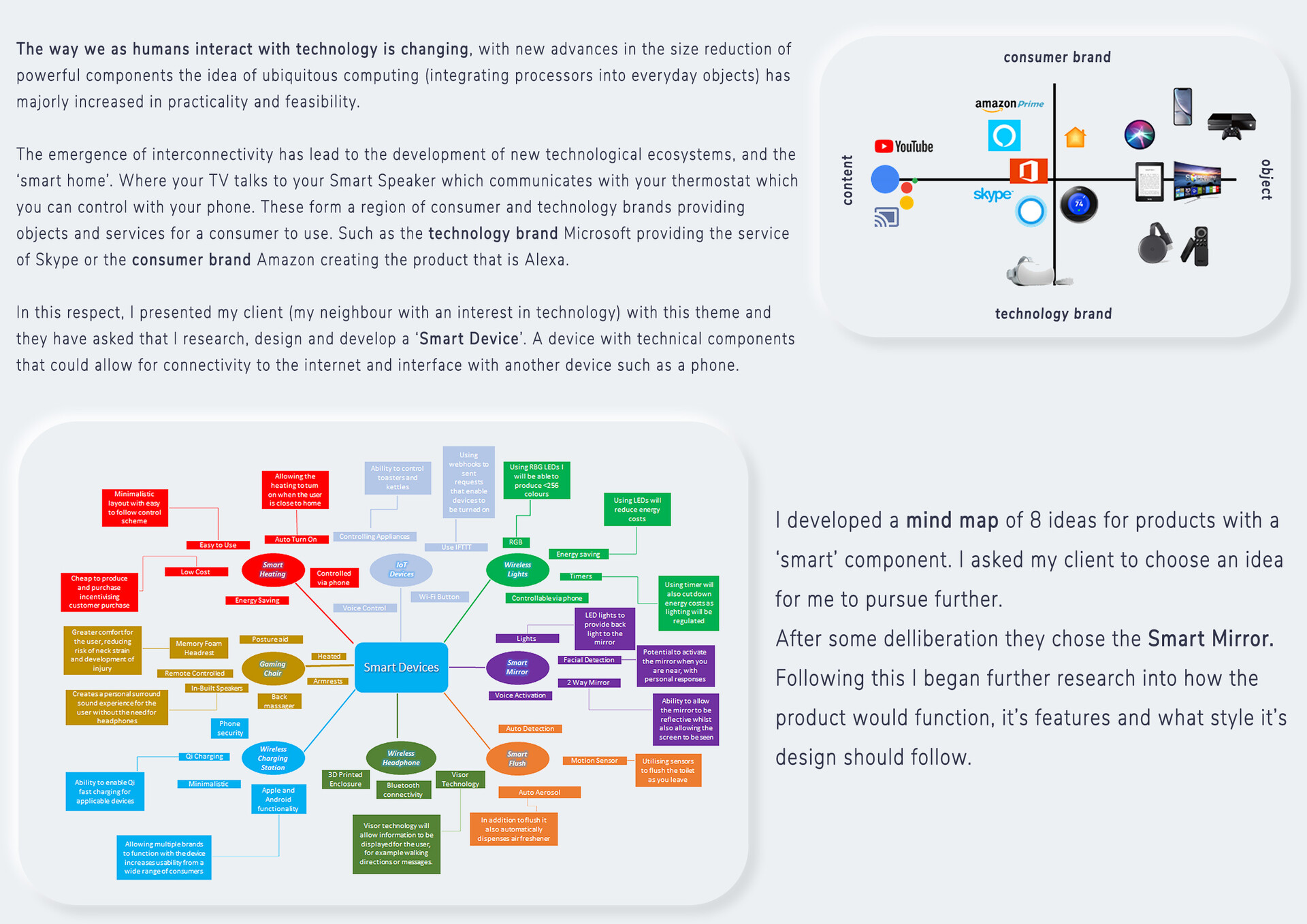

The way we as humans interact with technology is changing. The emergent reasoning abilities exhibited by Large Language Models (Wei et al., 2022) present us with the ability to push functionality into even smaller devices (Miner, 2001). The future of Human Computer Interaction (HCI) may include LLMs as embodied agents participating in complex decision-making processes (Kojima et al., 2022) on devices to control them and perform tasks on our behalf (Schick et al., 2023).

The project incorporates software and hardware research to explore the evolving landscape of Human-Machine Interaction (HMI).

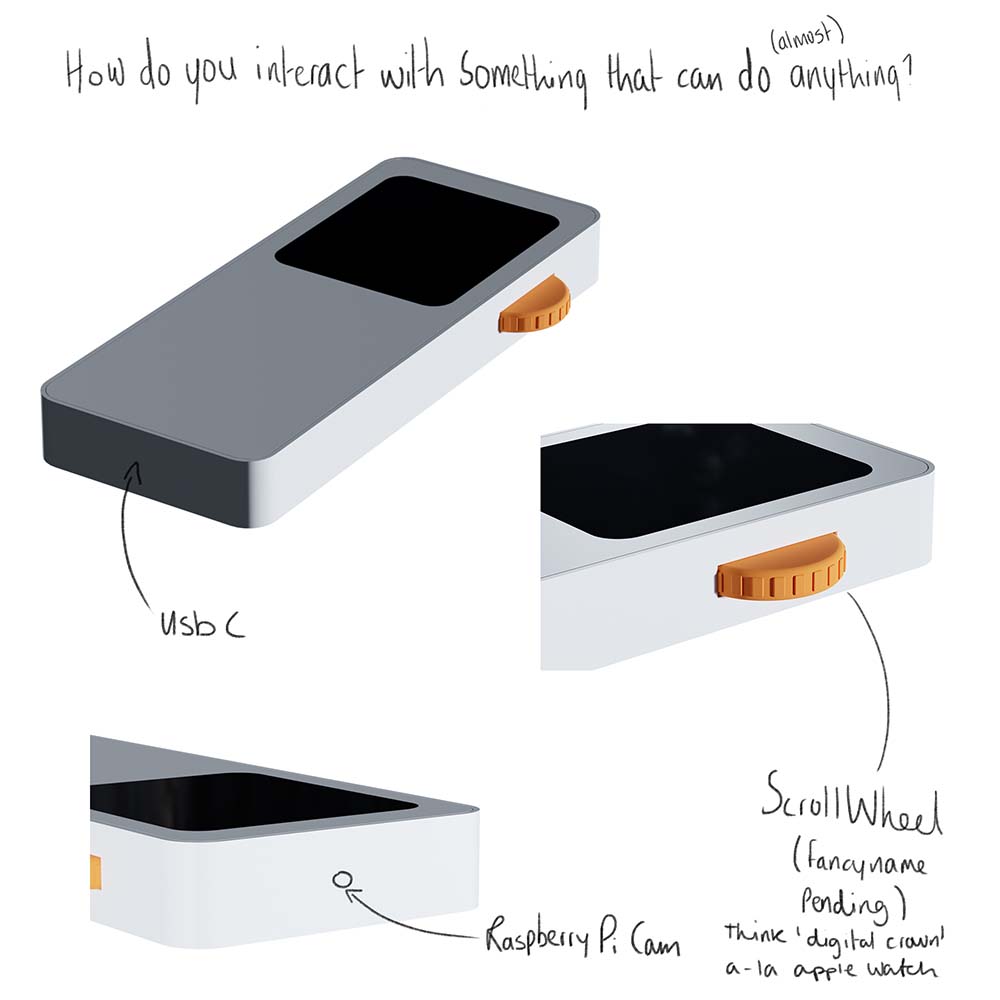

I posed a simple question:

"What is the most effective way to interact with something that can do (almost) anything?"

Read more about the project objectives.

Through this lens, I investigated language as a primary mode of interaction, culminating in the design and implementation of two natural language interfaces developed on the Raspberry Pi platform: Africus and Pager.

Read the full dissertation, ask the chatbot to summarise the project, or click the play button above to listen to an AI Deepdive (created with NotebookLM).

Drawing inspiration from my West African heritage, the project takes its name from the Griot — an oral historian and storyteller vital to preserving the collective knowledge of a village. In much the same way, Large Language Models (LLMs) encode a distilled essence of human history and culture within their weights. Learn more about the Griot tradition.

GRiOT Project Poster [view full resolution]

The GRiOT project considers the role of friction in user interfaces and its impact on user agency and engagement. It explores how deliberate introduction or removal of friction influences the interaction experience, aligning with concepts like the "Gulf of Execution and Evaluation." Additionally, GRiOT discusses generative interfaces, showcasing how natural language systems bridge the gap between human intent and machine capabilities, enabling intuitive interaction without prior technical expertise. The project also touches on the physical embodiment of AI, examining how tangible interfaces, such as smart mirrors or handheld devices, reshape perceptions of technology and encourage a sense of familiarity, moving away from the ‘black box’ narrative.

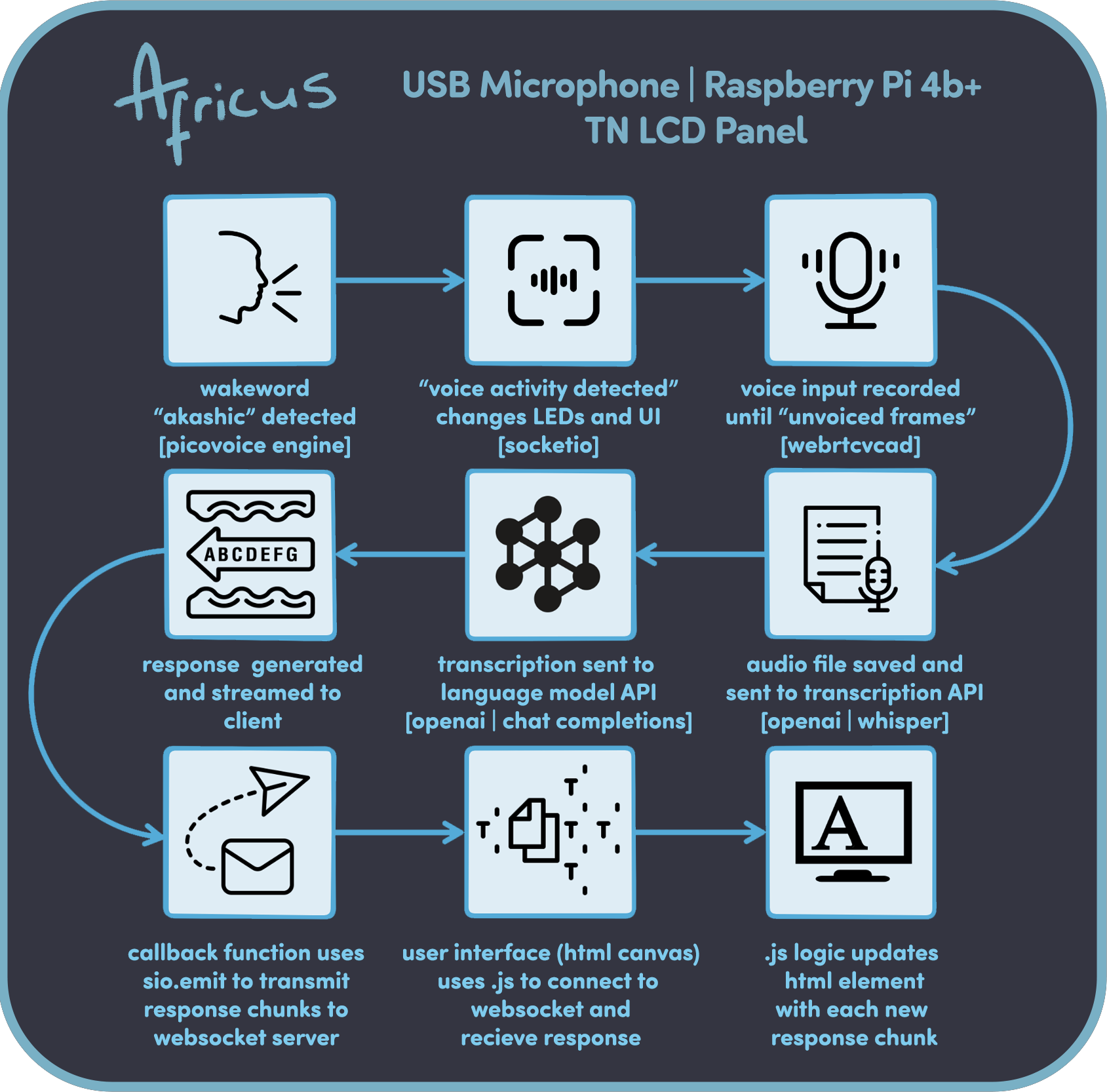

Africus 2023

Spiritual successor to my A-Level Product Design Coursework (left in limbo due to covid).

Africus (named after the Africus Monolith from Stanley Kubrick's 2001: A Space Odyssey) is a voice-first Natural Language Interface in a Smart Mirror form factor, providing an amazing canvas for post-WIMP UX design.

Created with a Raspberry Pi 4B+ and Custom Python WebSocket Framework.

The assistant is invoked with the wake word "Akashic," a reference to the Akashic Records, an esoteric concept from mysticism: a compendium of all events, actions, thoughts, feelings, and intentions that have occurred in the past, present, and future.

While I don't believe language models can explicitly predict the future, their training data encompasses a vast array of information up until their knowledge cutoff, enabling them to provide insightful and informed responses based on existing patterns and data.

July 2023

April 2024

Process Flow

Africus was showcased at the Solent University Expo in 2023, where it received positive feedback from attendees and industry professionals. The project was also displayed at the Airbus Space Apprentice Showcase of the same year.

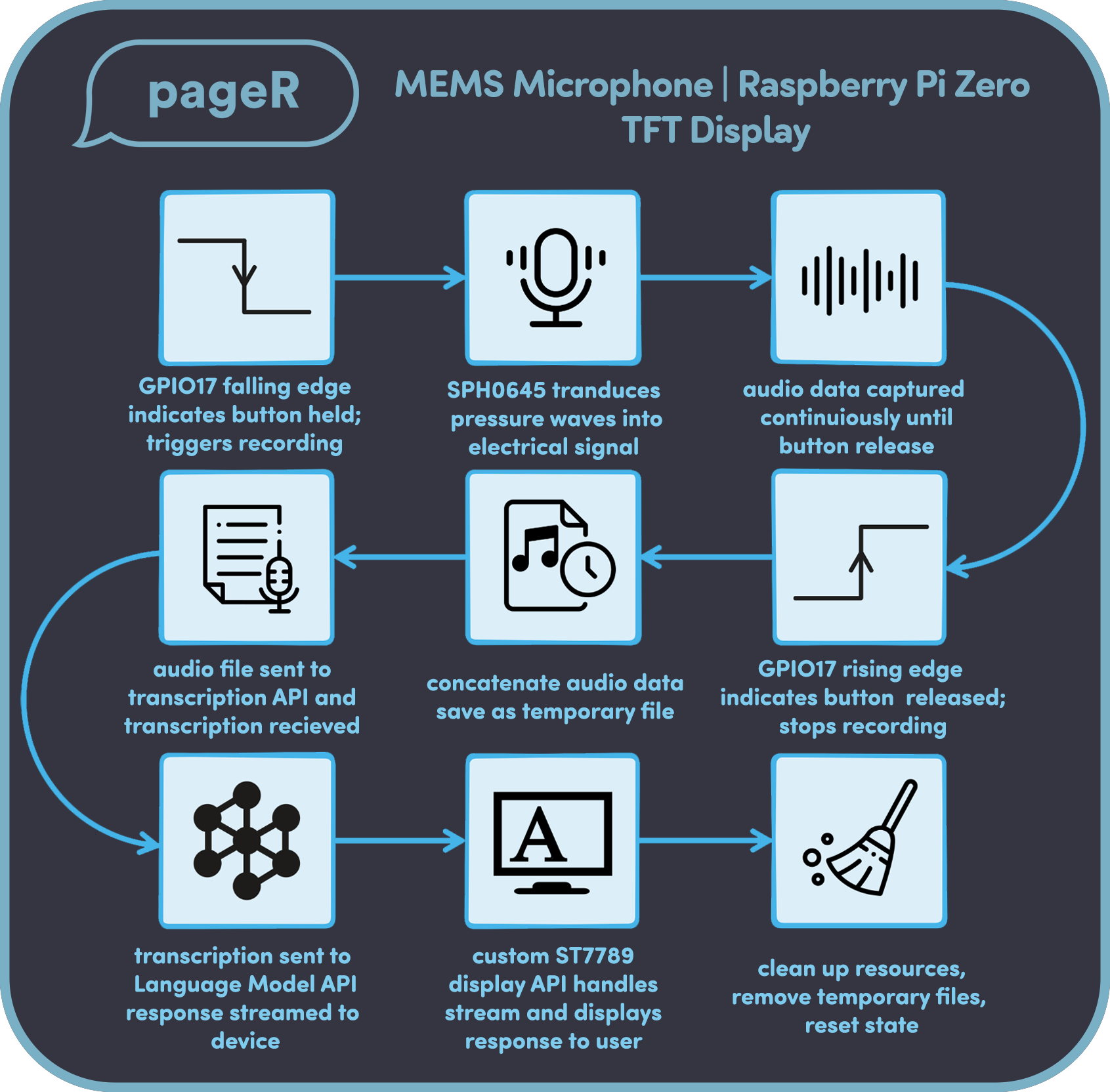

Pager 2023

Part of the GRiOT hardware ecosystem, pageR is a handheld Natural Language Interface.

Built with a Raspberry Pi Zero 2 W, MEMS microphone, TFT display and custom rotary encoder assembly, pageR features push-to-talk functionality to facilitate communication with an LLM.

Running off a single Python file with some custom modules, pageR is a proof-of-concept for the Natural Language Human Input Device project.
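The push-to-talk behaviour described above might look something like the following sketch. It is an assumption-laden illustration, not pageR's actual code: the button and microphone are simulated as plain Python iterables, and `transcribe` and `ask_model` are hypothetical callables standing in for whatever speech-to-text and LLM services the device uses.

```python
from typing import Callable, Iterable, List


def push_to_talk(
    button_states: Iterable[bool],
    audio_chunks: Iterable[bytes],
    transcribe: Callable[[bytes], str],
    ask_model: Callable[[str], str],
) -> List[str]:
    """Buffer audio while the button is held; on release, transcribe and query."""
    replies = []
    buffer = bytearray()
    was_pressed = False
    for pressed, chunk in zip(button_states, audio_chunks):
        if pressed:
            buffer.extend(chunk)  # keep recording while the button is held
        elif was_pressed and buffer:
            text = transcribe(bytes(buffer))  # button released: utterance ends
            replies.append(ask_model(text))
            buffer.clear()
        was_pressed = pressed
    # Audio still buffered when the stream ends (button never released) is dropped.
    return replies


# Simulated run: two separate presses, with stand-in transcription and model calls.
states = [False, True, True, False, True, False]
chunks = [b"", b"he", b"y", b"", b"yo", b""]
replies = push_to_talk(states, chunks, lambda a: a.decode(), lambda t: t.upper())
```

Passing the speech-to-text and model calls in as arguments keeps the loop testable on a desktop without the hardware attached.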

Final Concept Model

Functional Prototype

Process Flow

Preliminary Designs

Initial Prototype

Pager Render

Experimenting with running Raspberry Pi OS

🌐 vidalion.co 2023

The website you're currently on!

This site is self-hosted on a Google Cloud VM running Ubuntu Linux and has provided me with ample opportunity to develop my network management skills. The server also acts as a sandbox for some of my web-based experiments.

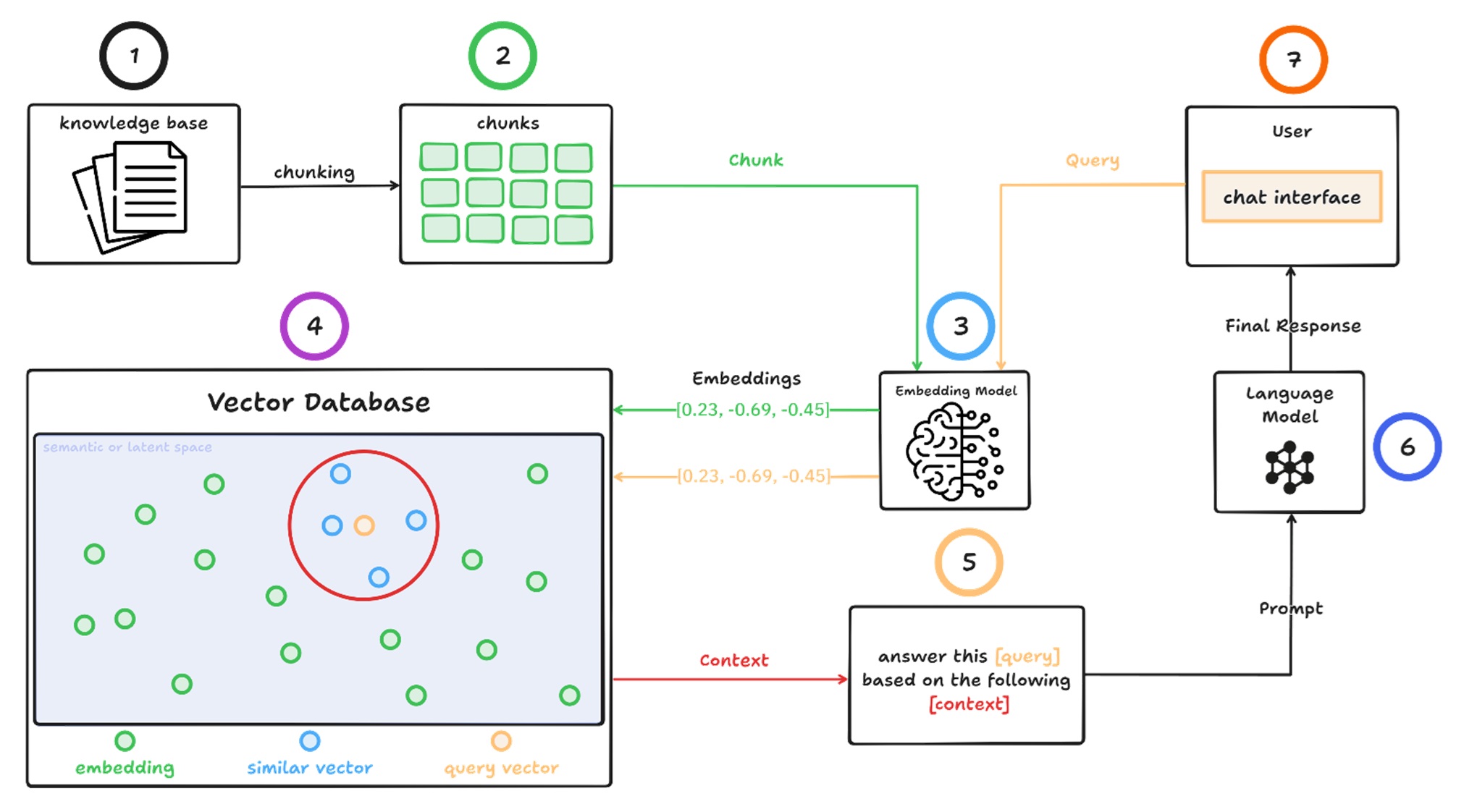

Fascinated by the utility of Retrieval Augmented Generation (RAG), I aimed to integrate it into a project, giving a language model the tools to become my "personal PR agency".

RAG Illustration [source]

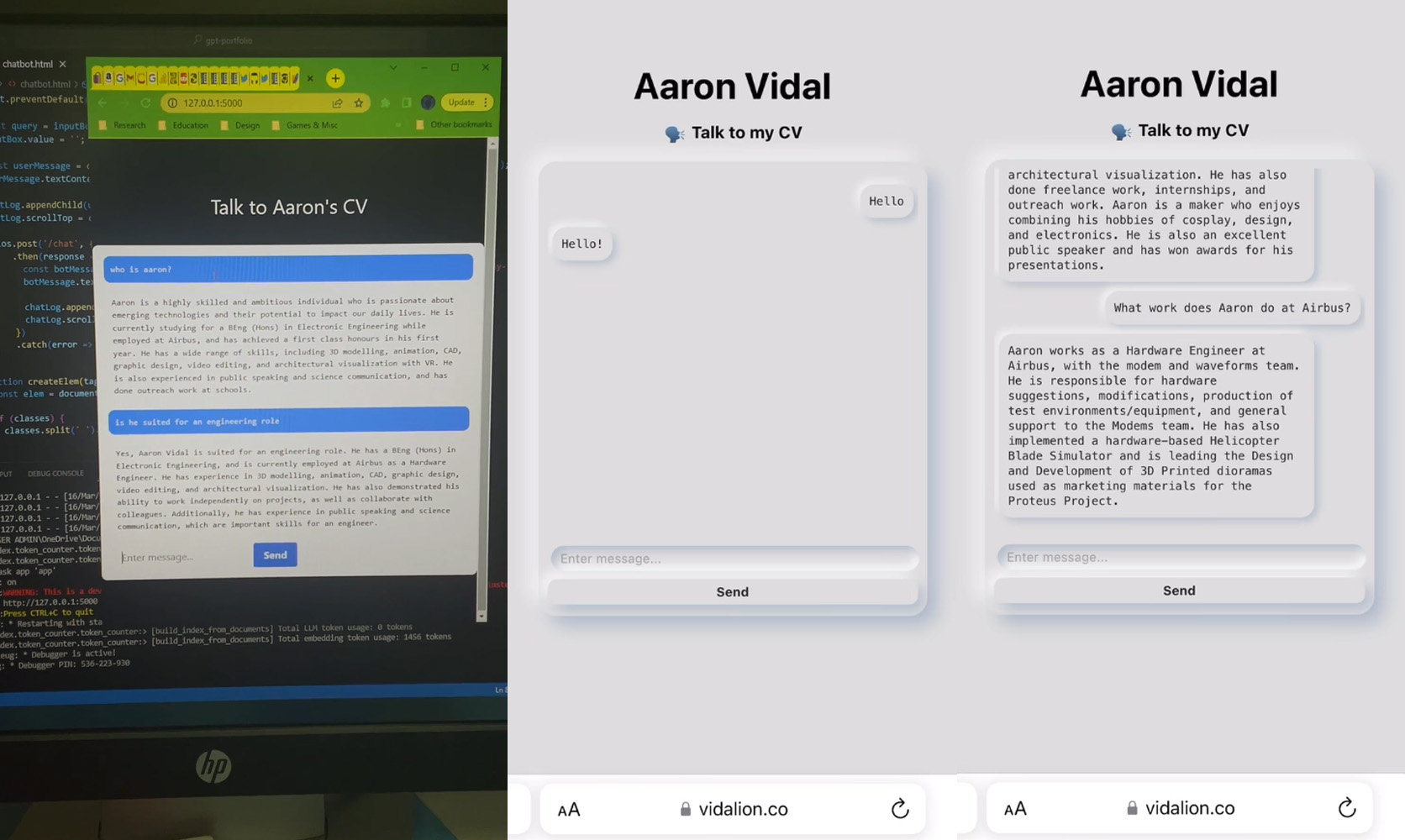

The initial iteration of the chatbot used the text embeddings of my CV, in addition to LlamaIndex's RAG framework, to serve answers to queries about myself. The web design followed a neumorphic style, for which I maintain an affinity.
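To illustrate the retrieval step behind this kind of chatbot, here is a minimal, dependency-free sketch. It does not use LlamaIndex or a real embedding model: `embed` is a toy word-count stand-in, and the function names, the prompt wording and the three CV snippets (paraphrased from this page) are assumptions for the example only.

```python
import math
from collections import Counter

# Illustrative CV snippets; a real system would chunk the full document.
CV_CHUNKS = [
    "hardware engineer at airbus space since 2021",
    "first place at the imperial college maker challenge in 2019",
    "freelance computer aided and graphic design since 2019",
]


def embed(text: str) -> Counter:
    """Toy embedding: lower-cased word counts (a stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list, top_k: int = 1) -> list:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, chunks: list) -> str:
    """Prepend the retrieved context to the question before calling a model."""
    context = "\n".join(retrieve(query, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


prompt = build_prompt("hardware engineer role", CV_CHUNKS)
```

The language model only ever sees the retrieved snippet plus the question, which is what lets it answer from the CV rather than from its training data alone.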

Website Design Development

Following a versioning error, I "learned through doing" the importance of developing in virtual environments and creating a requirements.txt file. This pause inspired me to develop my own framework using the OpenAI Assistants API, moving towards a skeuomorphic, bash-terminal-inspired design for the chatbot.

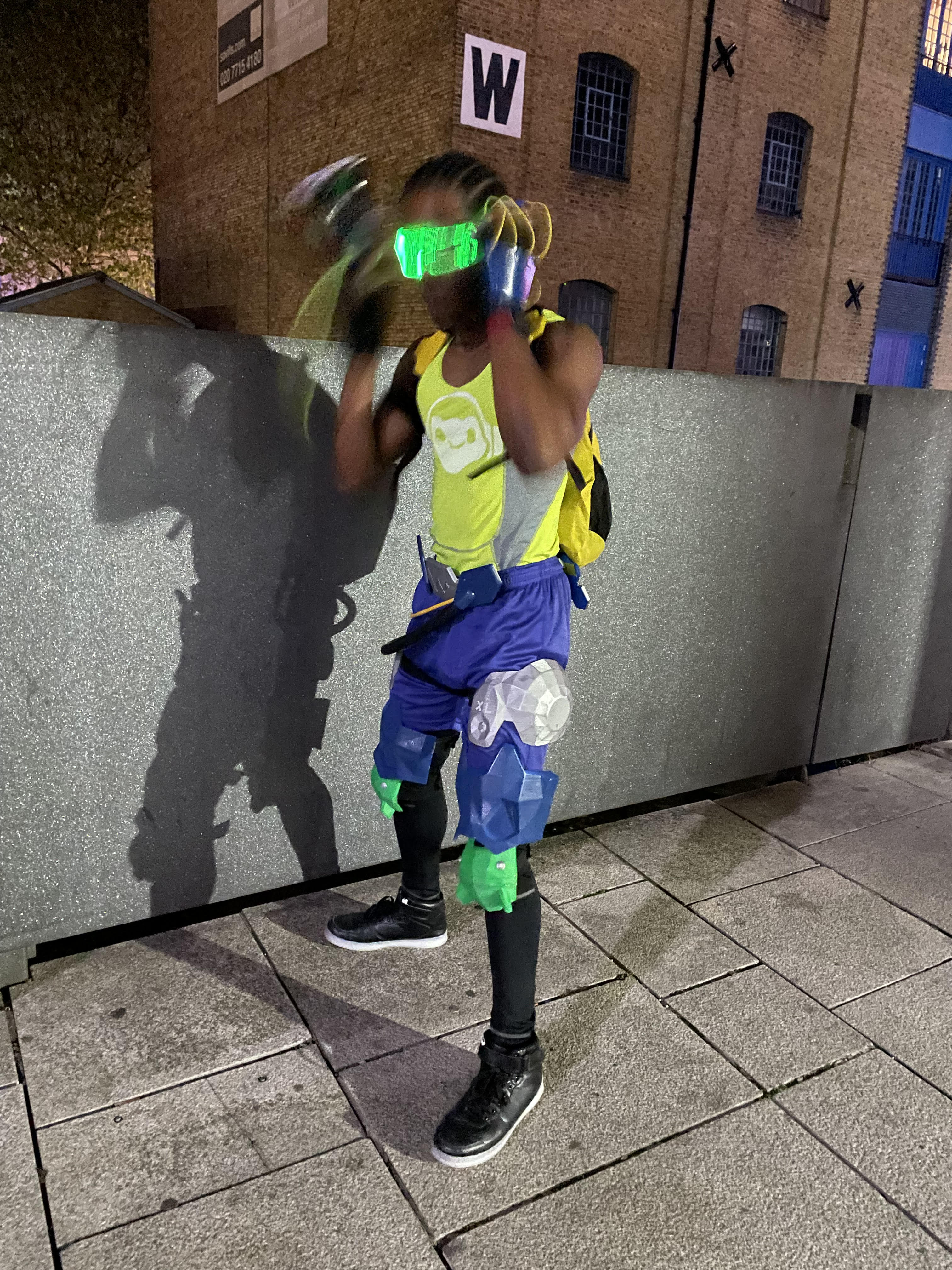

🐸 Lucio v1 2019 | v2 2022

I'm a huge fan of a game called Overwatch, and following the creation of Visor (inspired by the character Lucio) I set out to create the full cosplay from scratch, using a mix of 3D printing, textiles, and laser cutting.

Version One of the cosplay was completed in 2019 just in time for London Comic Con. Version Two was completed in April 2022 for Portsmouth Comic Con and worn at the London Comic Con of that same year (and Halloween too, had to make the most of it 😅).

With the release of Overwatch 2, there may be a v3 in the very near future.

MCM Comic Con London 2019

Portsmouth Comic Con 2022

London Comic Con 2022

MCM Comic Con London 2019

MCM Comic Con London 2019

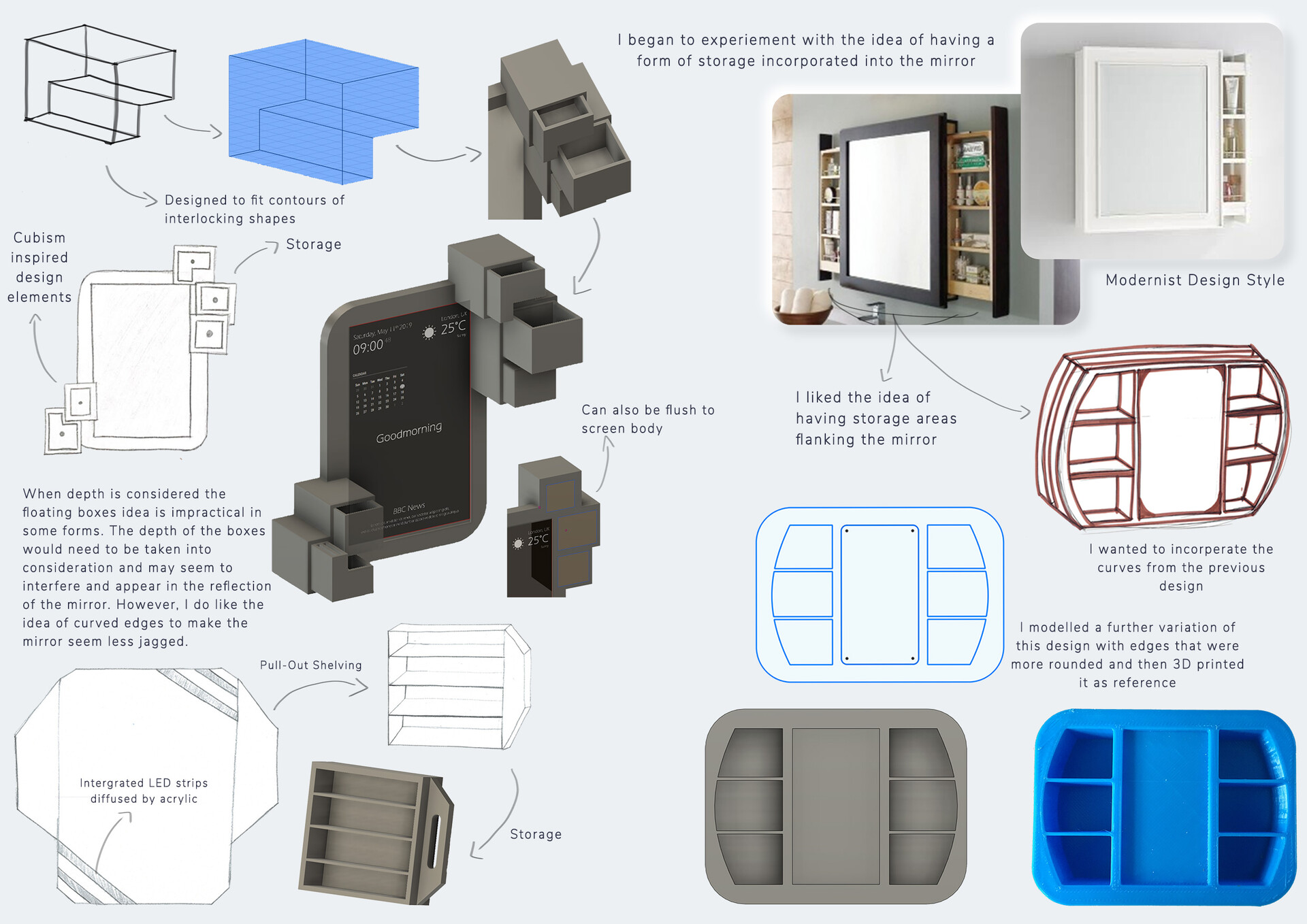

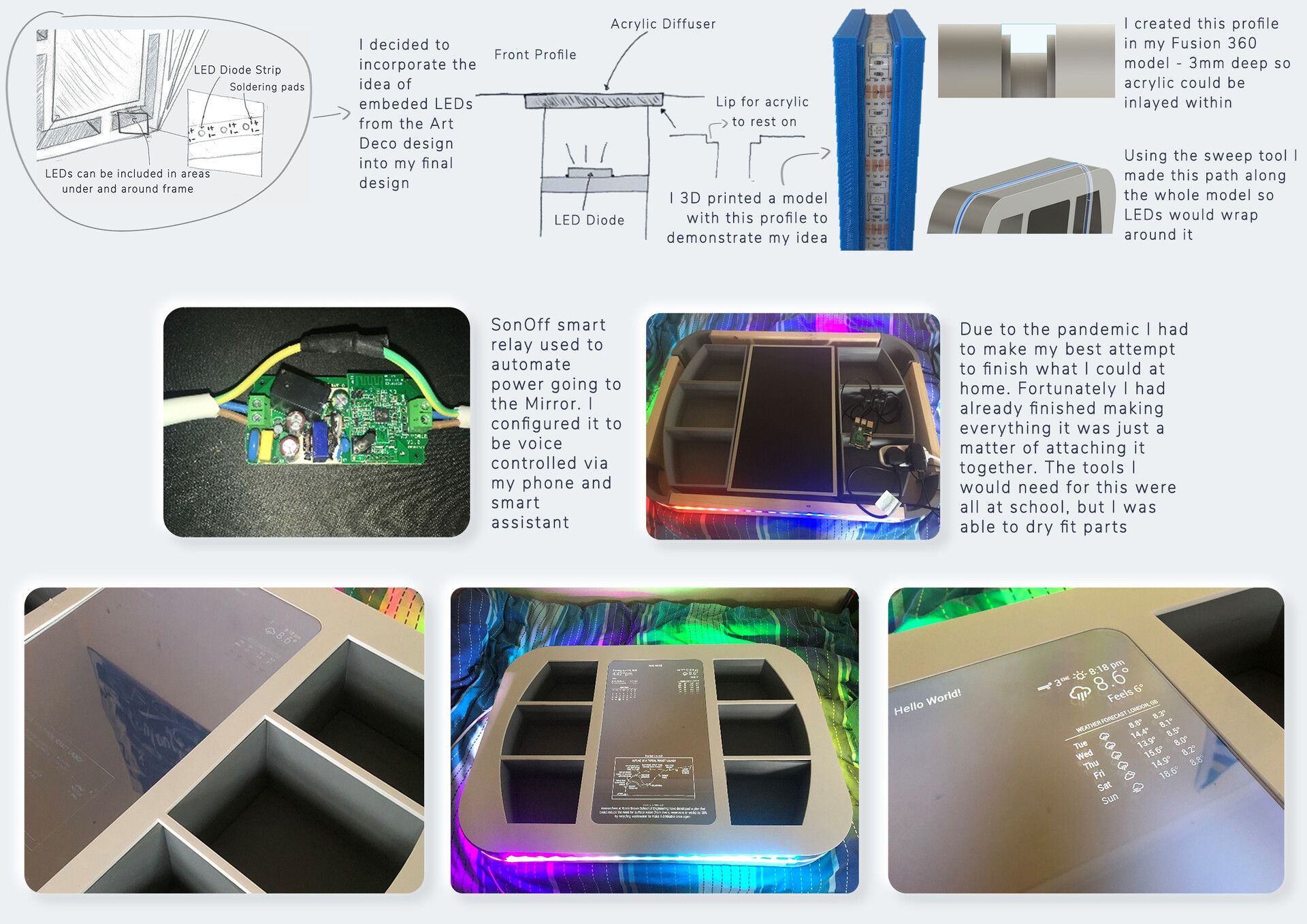

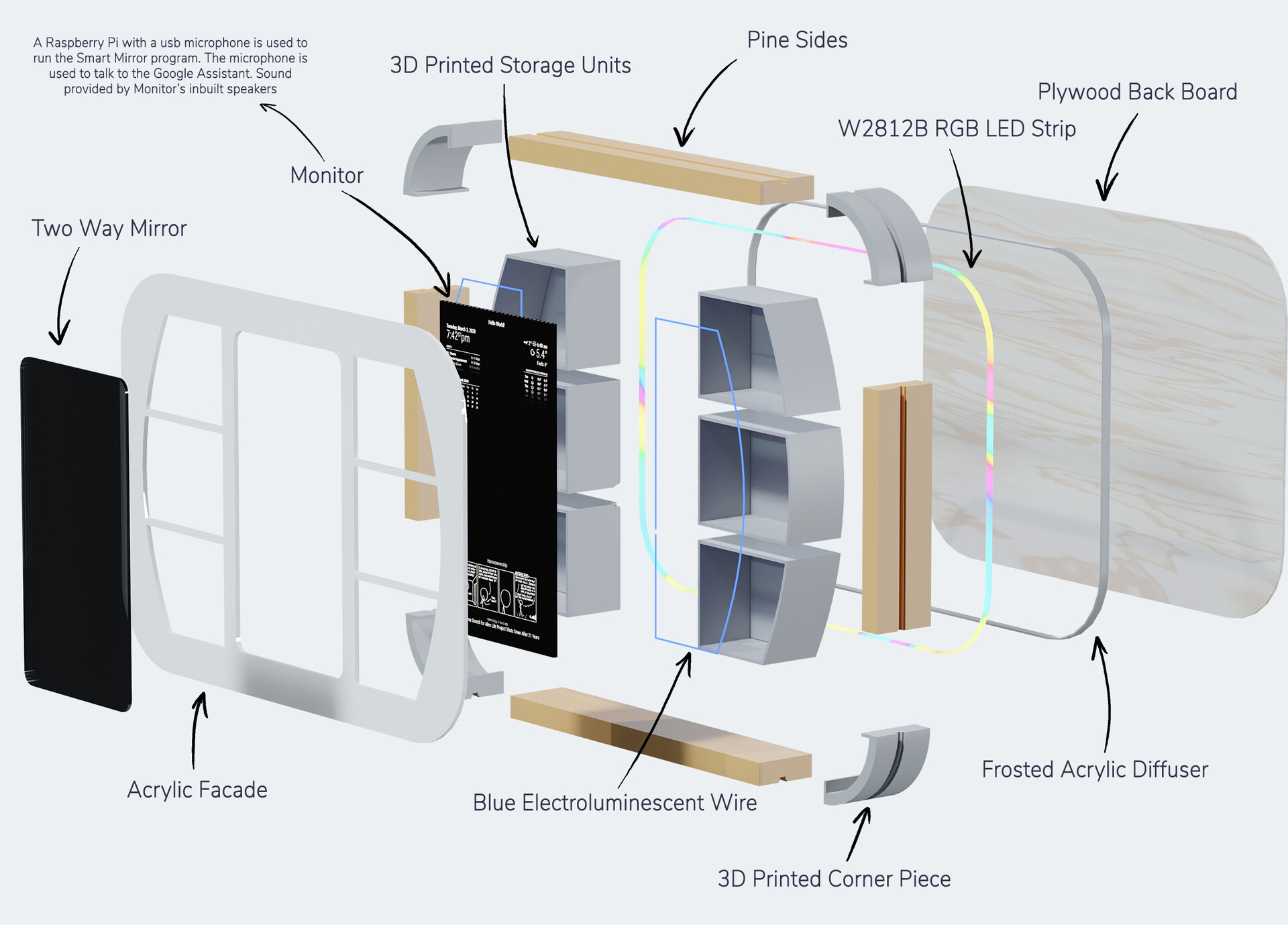

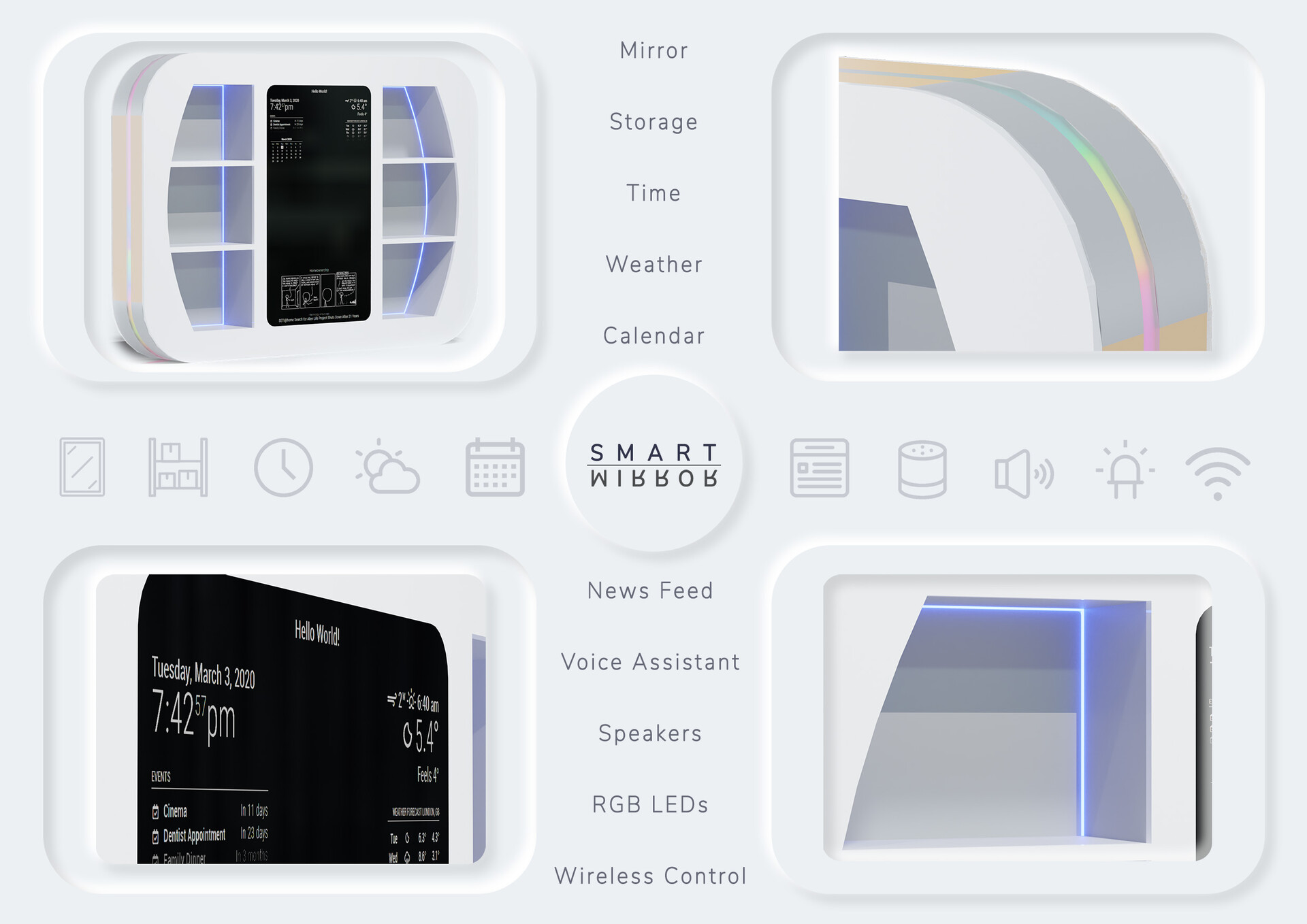

🖥️ Smart Mirror 2020

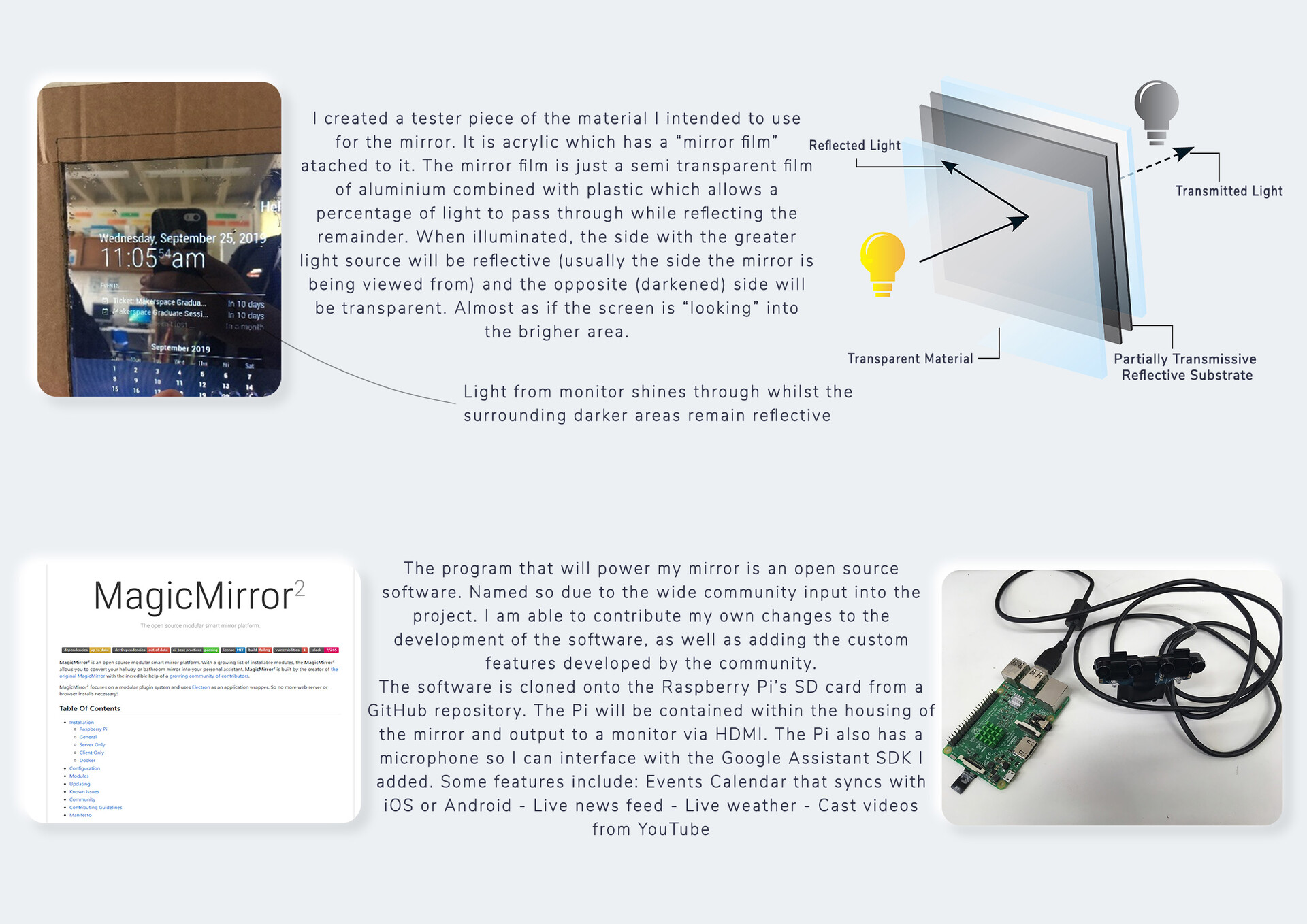

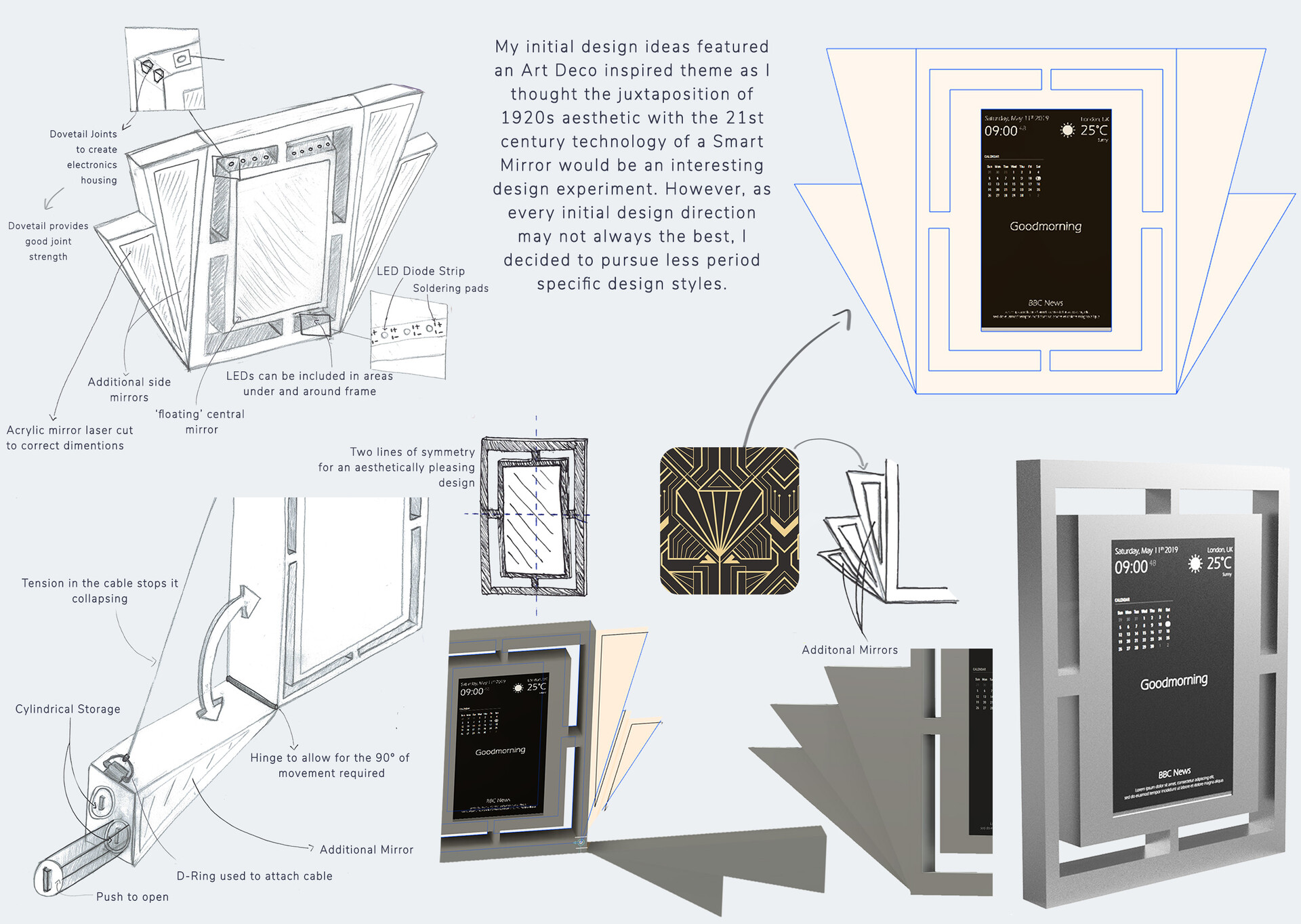

For my A-Level Product Design coursework, I created a Smart Mirror with a Raspberry Pi 3B+ and a two-way mirror.

My aim was to integrate interactive technology into home decor, in this case a shelving unit. It makes use of the open-source MagicMirror² software to display the time, date, weather, and news headlines, drawing on various APIs.

This project was a continuation of my exploration into IoT and embedded computing, and included laser cutting, woodworking, 3D printing and electronics.

🔧 Imperial College Maker Challenge 2019

During my time at Imperial College's Maker Challenge, a 12-week programme at the Reach Out Makerspace in White City, I developed "Visor" - a head-mounted display inspired by the character Lucio from Overwatch. The project combined design, prototyping, electronics, and embedded computing.

Using Fusion 360 for CAD design, I created custom 3D printed components while also repurposing salvaged electronics - combining a hacked pair of headphones with a Bluetooth module. I integrated Amazon Alexa functionality using a Raspberry Pi for a works-like model, building upon my previous experience with the SDK.

Visor went on to win first place in the 7th Maker Challenge competition, judged by a panel of industry experts. The experience provided invaluable hands-on exposure to the design engineering process.

🔈 Alexa SDK Implementation 2017

After a week of trial and error, scouring out-of-date blog posts and seeking help from online communities, I implemented the Amazon Alexa Software Development Kit (SDK) on a Raspberry Pi 3B+.

This project solidified the tenacious attitude I continue to apply to my creative endeavours: a solution is always out there, you just have to look for it.

Alexa SDK Demo (featuring excited camera shake)

Me (15) and the Head of Southampton University Computing and Electronics Department. I attended an open day (maybe a few years early) and brought the Raspberry Pi with me. Connected it to my phone's hotspot in order to demo it.

🤖 Imperial College Robotics Competition 2012

During the summer of 2012, to the backdrop of the London Games, I took part in a week-long robotics course hosted at Imperial College London. We spent the week learning about the VEX robotics platform and created a remote-controlled robot to compete in a robot football tournament, where you scored points by moving balls into a net or over the outside of the court.

Although I was the youngest member of the course at age 10, I worked closely with my team to develop a forklift-style bucket for lifting balls, which played a key role in our team's victory.

Pit Stop

Robot in Action

🔨 The Decking 2003

Technically my first project.

I aided my dad in building the decking in our garden.

🏆 Honors & Awards

Airbus Scholar

Awarded a place on a highly competitive degree apprenticeship

First Place @ Imperial College Maker Challenge 2019

🧭 Explorations

📸 Photography

I was drawn to the tactile, analogue nature of film photography after borrowing my dad's old film camera, a Chinon CE-5.

Low friction interfaces have abstracted so much away (for the sake of UX) that technology can often feel like a “black box”. Tactile experiences bring more meaningful interactions with technology - something I discussed at length in chapter 1.2 of my undergraduate dissertation.

⚡ Electronics

💻 Computer Graphics

More work on my ArtStation

🏋🏾♀️ Fitness

"It is a shame for one to grow old, without seeing the beauty and strength of which their body is capable" - Socrates

i vehemently deny any and all gym bro accusations

✉️ aaron@vidalion.co